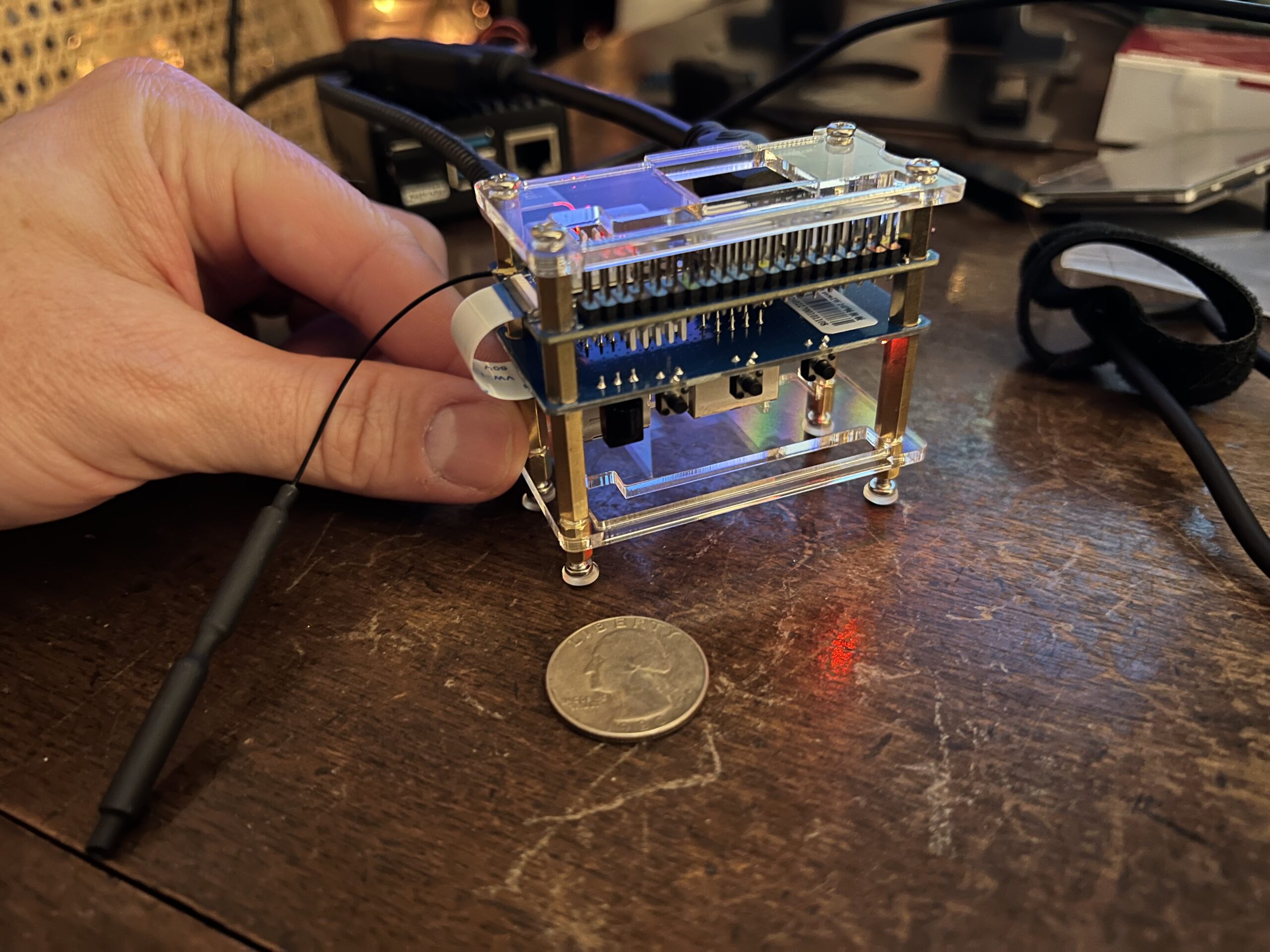

Meet Pipsqueak: A pocket-sized AI assistant powered by an Orange Pi Zero 2W and TinyLlama, proving that local LLMs don’t always need powerful hardware.

You can see how few tokens per second Pipsqueak can infer… but YAY!

The Hardware Setup

At the heart of this experiment is the Orange Pi Zero 2W, a remarkably capable single-board computer (SBC) that punches well above its weight class. Despite its diminutive size and modest $15 price tag, it packs some impressive specs:

- Allwinner H618 Quad-core ARM Cortex-A53 processor

- 4GB of DDR3 RAM (the key to making this project possible)

- Mali G31-MP2 GPU

- Compact form factor similar to Raspberry Pi Zero

The system was augmented with:

- Portable mini HDMI display

- Tiny foldable keyboard and mouse with bluetooth dongle

- Custom GPIO-mounted audio module for voice output (input possible but no stable driver available for Orange Pi systems yet)

- 32GB Sandisk Pro microSD card running Armbian Linux

The Software Stack

The choice of TinyLlama through Ollama was deliberate. Here’s why:

- TinyLlama is a 1.5GB model, leaving adequate RAM for system operations

- Ollama provides straightforward deployment on ARM architectures

- The model offers a good balance of capability vs. resource requirements

Memory Management: The Critical Factor

The 4GB of RAM on the Orange Pi Zero 2W proved to be the perfect amount for this setup. Here’s how the memory was allocated:

- ~1.5GB for TinyLlama model

- ~2GB for system resources and overhead

- Remaining ~0.5GB for active processes

This distribution ensures stable operation while maintaining enough headroom for the operating system and basic multitasking.

Performance Characteristics

Let’s be honest about the performance: Pipsqueak isn’t going to win any speed records. Inference times are notably slower than on more powerful hardware:

- Initial model loading: ~45 seconds

- Response generation: 15-20 seconds per paragraph

- Temperature management becomes important during extended use so using heat sinks is critical. Inference heats this puppy up fast!

However, the mere fact that it works at all is remarkable. The system maintains stability and can engage in continuous conversation, albeit at a leisurely pace.

Loading Ollama onto the Orange Pi Zero 2W takes a while… but it works!

Audio Integration

One of the more exciting aspects was adding voice output capabilities:

- Custom GPIO audio module installation

- Configuration of text-to-speech packages

- Integration with LLM output for voiced responses

While not necessary for the core functionality, this addition transforms Pipsqueak from a mere technical demonstration into something more engaging and interactive.

Practical Applications

This setup opens interesting possibilities for edge computing and local AI:

- Offline AI assistant for basic tasks

- Educational tool for understanding LLMs

- Platform for experimenting with model optimization

- Proof of concept for low-power AI applications

- Tiny LLM integration into everyday items is the future (my blender recommends smoothie recipes!)

Lessons Learned

Several key insights emerged from this project:

- RAM is more critical than processing power for running small LLMs

- The Orange Pi Zero 2W’s 4GB RAM is a sweet spot for tiny language models

- Proper thermal management is essential for stable operation

- GPIO configuration for additional peripherals adds significant utility

- Linux is the bomb

Moving Forward

While Pipsqueak works as intended, several potential improvements come to mind:

- Exploring quantized models for better performance

- Utilizing a TPU board for faster inference

- Implementing better thermal management

- Adding battery power for portable operation

- Experimenting with different model architectures

Who you calling tiny?

Pipsqueak demonstrates that local AI doesn’t always require expensive hardware or cloud connectivity. While it may not replace more powerful systems, it shows that meaningful AI applications are possible on extremely modest hardware. This has important implications for education, prototyping, and regions with limited internet connectivity.

The success of running TinyLlama on a $15 computer suggests we’re entering an era where AI can truly run anywhere. The limitations are real, but they’re far outweighed by the possibilities this opens up for accessible, local AI deployment.

Remember: sometimes the most interesting discoveries come not from pushing the boundaries of what’s fastest, but from exploring what’s possible with the least.